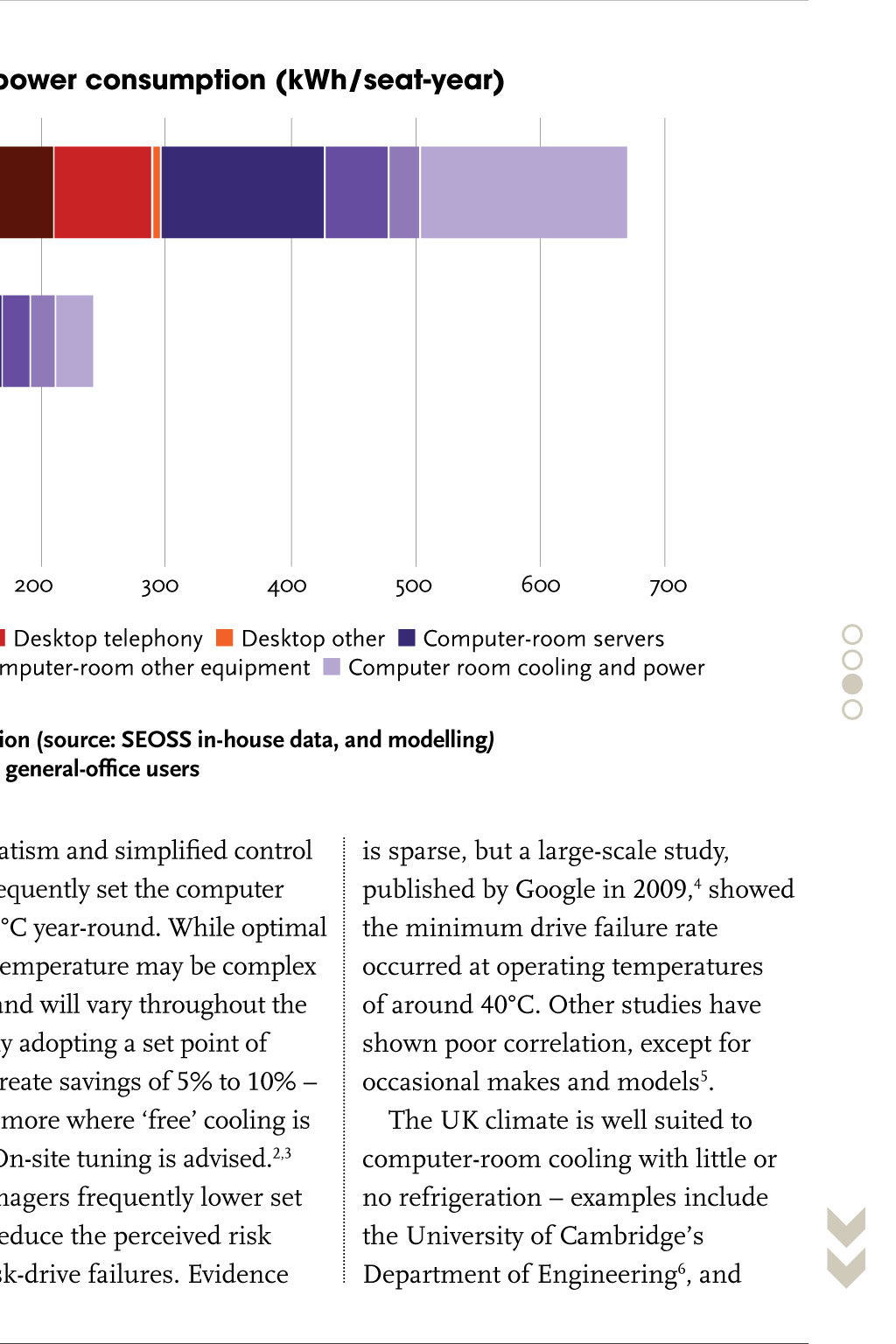

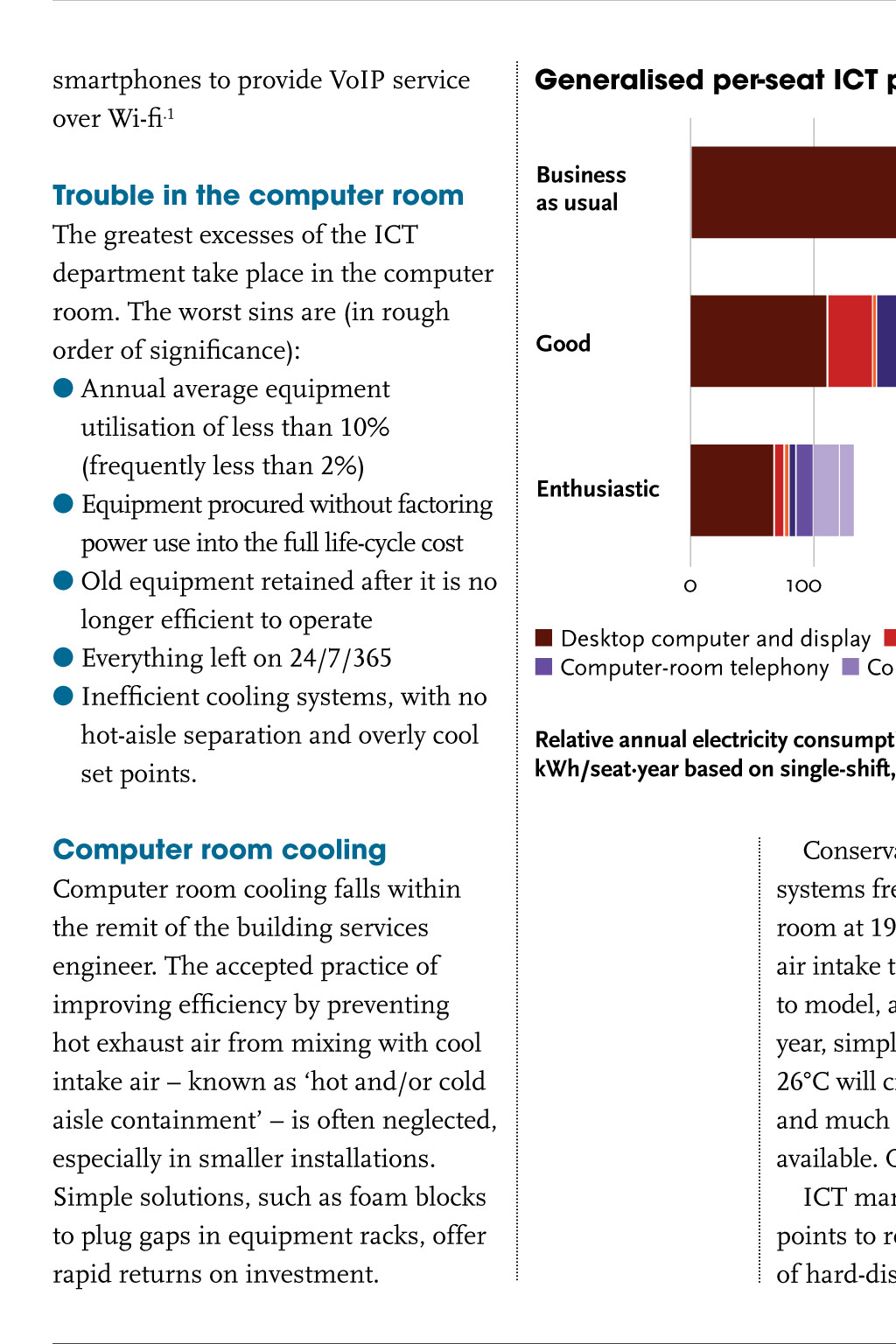

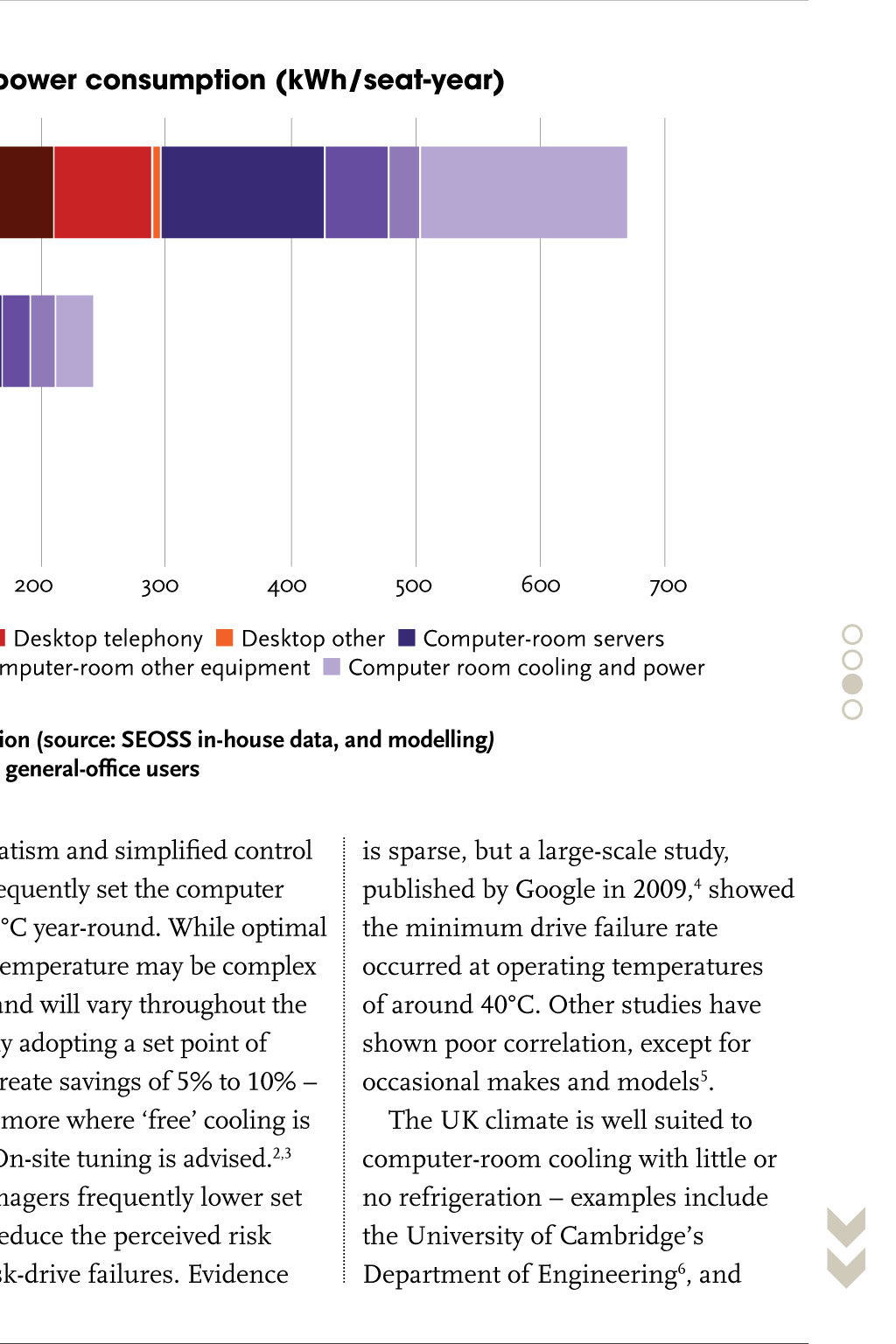

BEST PRACTICE ICT SPECIFICATION

CHIP SIN

Poor specification of ICT systems can undo all the good design work that goes into a low-energy space. Tim Small explains how to avoid the pitfalls

As the energy performance of buildings improves, unregulated loads (the equipment brought in by occupiers, and the services required to support it) can easily account for the majority of the carbon footprint. Information and communications technologies (ICT) can be a large part of this, and The Woodland Trust's new headquarters in Grantham is a good example (see "Trees of Knowledge", CIBSE Journal, October 2014). Despite the building services team specifying thin-client terminals, ICT electricity use was still higher than predicted.

Building designers have often treated the ICT specification as a given, set by an industry standard, by the client's requirements or by the client's ICT service providers. However, it is increasingly clear that these requirements need to be challenged, for two reasons: first, ICT experts may not be used to paying much attention to energy efficiency; second, if all aspects of a building's energy use are not considered at the time of design or alteration, the decisions made may be unbalanced.

UP IN THE CLOUD

Put simply, the cloud means outsourcing: renting time on computers located elsewhere. A common analogy is the electricity grid: only a few organisations find it economical to operate off the grid. The analogy is not perfect, however, and many organisations may not yet be able to make significant use of cloud computing. Where viable, the cloud can greatly reduce the sinful goings-on in the computer room; in some cases, most on-site equipment can be dispensed with. This doesn't simply amount to moving the problem elsewhere, because doing ICT extremely efficiently is central to cloud computing providers' business models. Fortunately, many of the best tricks of cloud providers are also available to smaller ICT users.
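Much of the cloud providers' advantage comes from high utilisation, and the same arithmetic applies to an in-house computer room. The sketch below is a minimal illustration, not the author's method: it assumes a linear server power model in which a server draws about half its maximum power when idle (the figure the article gives for modern servers) and power rises linearly with load. The 200-seat office, the 300 W maximum draw, the fleet load of 0.8 "fully busy server" equivalents and the function names are all hypothetical. It compares the 10:1 occupant-to-server ratio the article calls common with the 40:1 ratio it calls attainable.

```python
"""Rough annual-energy comparison for computer-room servers.

Hypothetical sketch: assumes a linear power model
P(u) = P_idle + (P_max - P_idle) * u, with P_idle = 0.5 * P_max.
"""

HOURS_PER_YEAR = 8760


def server_power_w(p_max_w: float, utilisation: float, idle_fraction: float = 0.5) -> float:
    """Electrical power (W) drawn by one server at utilisation 0..1."""
    if not 0.0 <= utilisation <= 1.0:
        raise ValueError("utilisation must be between 0 and 1")
    p_idle = idle_fraction * p_max_w
    return p_idle + (p_max_w - p_idle) * utilisation


def annual_kwh(seats: int, seats_per_server: int, p_max_w: float, total_work: float) -> float:
    """Annual energy (kWh) of a fleet sized at `seats_per_server` occupants per server.

    `total_work` is the fleet's average load in 'fully busy server'
    equivalents (a hypothetical unit), spread evenly over all servers,
    which run 24/7.
    """
    servers = -(-seats // seats_per_server)  # ceiling division
    utilisation = total_work / servers
    return servers * server_power_w(p_max_w, utilisation) * HOURS_PER_YEAR / 1000


if __name__ == "__main__":
    # Hypothetical 200-seat office; 300 W maximum draw per server.
    for ratio in (10, 40):
        kwh = annual_kwh(seats=200, seats_per_server=ratio, p_max_w=300, total_work=0.8)
        print(f"{ratio}:1 occupants per server -> {kwh:,.0f} kWh/year")
    # Expected output:
    # 10:1 occupants per server -> 27,331 kWh/year
    # 40:1 occupants per server -> 7,621 kWh/year
```

Because idle power dominates at such low utilisation, cutting the server count by three-quarters cuts energy by roughly 72% in this sketch, even though each remaining server works four times harder. Real servers are not perfectly linear, so treat the result as an order-of-magnitude estimate.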
Traditionally, ICT sales were dominated by desktop PCs. IT procurement rarely factored in life-cycle energy use: the bill usually came out of someone else's budget, as did the costs of large cooling systems and of poor thermal comfort. In the past five years, smartphones, laptop computers and tablets have come to dominate the market, and their sales now outstrip those of desktop PCs by more than 10:1, so R&D effort has switched to extending battery life. Some of the resulting power-saving techniques have become widespread across desktop and server computers: energy use when idle is now commonly 30% lower.

The computers that offer the highest compute performance (for a given capital cost) are also the most power-hungry. In the past, all equipment clustered around the maximum-performance point and shared similarly high energy use; significant savings came only with large performance penalties or capital-cost increases. Equipment that consumes half the typical power, while incurring a performance penalty of only around 20%, is now available, and it often has the lowest full life-cycle cost.

Significant power savings on the desktop can be realised using mobile technology: for example, laptop computers with accessories to improve ergonomics, or desktops built with components designed for mobile devices. Systems that return rapidly from a very-low-power sleep state allow further savings.

The amount of information that can be imparted to a user is largely determined by the number of pixels on the display. Smaller screens usually consume less power, since the total illumination is reduced. Replacing a display with one that has more pixels is normally more power-efficient than adding a second monitor. Automatically varying screen brightness in response to ambient light can yield further savings.

Voice over Internet Protocol (VoIP) phones are now used in most organisations. Power consumption varies considerably between models, and the lifetime electricity bill of a phone can easily exceed its purchase cost. It is nearly always possible to retrofit software that powers off some or all phones while the building is vacant, saving 60%. More radical solutions include software phones on users' computers, and using smartphones to provide VoIP service over Wi-Fi.1

Trouble in the computer room

The greatest excesses of the ICT department take place in the computer room. The worst sins are (in rough order of significance):

- Annual average equipment utilisation of less than 10% (frequently less than 2%)
- Equipment procured without factoring power use into the full life-cycle cost
- Old equipment retained after it is no longer efficient to operate
- Everything left on 24/7/365
- Inefficient cooling systems, with no hot-aisle separation and overly cool set points.

Computer room cooling

Computer room cooling falls within the remit of the building services engineer. The accepted practice of improving efficiency by preventing hot exhaust air from mixing with cool intake air, known as hot- and/or cold-aisle containment, is often neglected, especially in smaller installations. Simple solutions, such as foam blocks to plug gaps in equipment racks, offer rapid returns on investment.

Conservatism and simplified control systems frequently set the computer room at 19°C year-round. While the optimal air intake temperature may be complex to model, and will vary throughout the year, simply adopting a set point of 26°C will create savings of 5% to 10%, and much more where free cooling is available. On-site tuning is advised.2,3

ICT managers frequently lower set points to reduce the perceived risk of hard-disk-drive failures. Evidence is sparse, but a large-scale study published by Google in 2007,4 showed that the minimum drive failure rate occurred at operating temperatures of around 40°C. Other studies have shown poor correlation, except for occasional makes and models.5

The UK climate is well suited to computer-room cooling with little or no refrigeration: examples include the University of Cambridge's Department of Engineering6 and the Nottingham Trent University datacentre.7 Similar technology has been deployed by large organisations such as Facebook.8

The biggest sins

Other issues require action by the ICT department, particularly underuse of equipment. Cloud service providers aim to exceed 50% average utilisation, but few other organisations exceed 5%.

Ideally, software would be installed to monitor the use of equipment throughout the computer room; this will also aid ICT planning and troubleshooting. However, the profile of the computer equipment's electrical power use will give a useful preliminary indication: modern servers use about half their maximum power when idle, and in nearly all organisations utilisation is less than 10% at some point during the week.

Another simple telltale sign is the ratio of computer-using office occupants to computer-room servers. For most organisations, ratios of 40:1 are attainable, yet ratios of 10:1 are common. This is a great opportunity for large reductions in both peak and annual computer-room power use.

The most significant gains in utilisation can often be made using systems that dynamically allocate compute workloads and associated data across physical computers. These private cloud systems are often the same as those used by commercial cloud providers: for example, the OpenStack project, co-founded by Nasa.

The responsibility gap

The cost of ICT power consumption is seldom borne by ICT departments, yet it forms a crucial lever for reducing the ICT footprint. Lower capital expenditure on electrical and cooling services may provide the impetus for management to close this responsibility gap. Cooperation from ICT departments becomes more likely if the change can be structured to reward them, for example with a share of any savings realised. Reducing the ICT footprint can then become an interesting opportunity rather than a distracting annoyance. One organisation successfully doing this is the University of Cambridge.9

In many buildings, the energy use of ICT systems can be reduced radically (Figure 1). However, this potential is seldom realised. This creates a great opportunity for building services engineers to draw management's attention to the capital and running-cost savings available if people join together to address the responsibility gap. Unless this gap can be closed, significant improvements are unlikely.

Figure 1: Generalised per-seat ICT power consumption: relative annual electricity consumption (kWh/seat-year) for "business as usual", "good" and "enthusiastic" practice, based on single-shift, general-office users. Categories: desktop computer and display; desktop telephony; desktop other; computer-room servers; computer-room telephony; computer-room other equipment; computer-room cooling and power. Source: SEOSS in-house data and modelling.

REFERENCES:

1. The IP phone power bill can be high, No Jitter, 2010. ubm.io/1BKllyZ
2. Data Center Operating Temperature: The Sweet Spot, Dell, 2011. bit.ly/1DFMhov
3. Thermal Guidelines for Data Processing Environments, ASHRAE, 2011.
4. Failure Trends in a Large Disk Drive Population, Eduardo Pinheiro, Wolf-Dietrich Weber and Luiz André Barroso, Google, 2007. bit.ly/1wBJxmX
5. Hard drive temperature: does it matter?, Backblaze. bit.ly/1GLboT1
6. Evaporative cooling: Engineering's second server room, University of Cambridge, Engineering Department. bit.ly/1BPXyxR
7. University Challenge, CIBSE Journal, October 2011, page 44. bit.ly/1J3yPeh
8. A critical appraisal of the Facebook design, James Hamilton, vice president, Amazon Web Services. bit.ly/1uN2nCf
9. The Electricity Incentivisation Scheme, University of Cambridge. bit.ly/1BT4dKn

TIM SMALL is a director at SEOSS. More information at seoss.co.uk/ICT-power
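The VoIP telephony claims in the article can be checked in the same back-of-envelope way. This is a hedged sketch with hypothetical inputs, not measured data: the 6 W phone draw, the £0.15/kWh tariff, the seven-year life and the 07:00 to 19:00 weekday schedule are assumptions, and the code treats a powered-off phone as drawing nothing. It estimates one phone's lifetime electricity cost when left on 24/7, and the fraction of energy saved by powering it off while the building is vacant.

```python
"""Back-of-envelope VoIP phone energy estimate (all inputs hypothetical)."""

HOURS_PER_YEAR = 8760
HOURS_PER_WEEK = 168


def lifetime_cost(power_w: float, tariff_per_kwh: float, years: float) -> float:
    """Electricity cost of one phone left on 24/7 for `years` years."""
    kwh_per_year = power_w * HOURS_PER_YEAR / 1000
    return kwh_per_year * tariff_per_kwh * years


def scheduled_saving(on_hours_per_week: float) -> float:
    """Fraction of energy saved by powering phones off outside `on_hours_per_week`.

    Simplification: a phone draws the same power whenever it is on,
    and nothing when it is powered off.
    """
    if not 0 <= on_hours_per_week <= HOURS_PER_WEEK:
        raise ValueError("on-hours must be between 0 and 168 per week")
    return 1 - on_hours_per_week / HOURS_PER_WEEK


if __name__ == "__main__":
    # Hypothetical inputs: 6 W phone, 0.15 GBP/kWh, 7-year service life.
    cost = lifetime_cost(power_w=6, tariff_per_kwh=0.15, years=7)
    print(f"Lifetime electricity cost, always on: £{cost:.2f}")  # £55.19
    # Phones on 07:00-19:00 on weekdays only: 12 h x 5 days.
    saving = scheduled_saving(on_hours_per_week=12 * 5)
    print(f"Saving from powering off while vacant: {saving:.0%}")  # 64%
```

With these assumptions the schedule saves about 64%, the same order as the 60% quoted in the article. Whether a phone's lifetime bill exceeds its purchase price depends on the model's draw, the tariff and the service life, so substitute measured values where possible.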